Autonomous Coding Agents: Fire and Forget

Autonomous coding agents let you fire off coding tasks and walk away. Safe YOLO mode changes everything.

Willison Causing Trouble Again

This post from Simon Willison hit my radar yesterday morning and it struck a chord with me. AI coding tools are a research sweet spot for me; that is, I try very hard to stay up to date. I’ve been working with the same cloud-based agents from OpenAI and Anthropic that are the subject of Willison’s post.

What to Call These Things?

Willison uses the term “asynchronous coding agents” for the category that encompasses OpenAI’s Codex Cloud and Anthropic’s Claude Code On The Web. “Asynchronous” didn’t seem ideal to me, so I decided to check in with my friend Opus to see what he knew about the topic. Turns out that Opus 4.1 has a very solid understanding of the concept and how it’s being described. Opus and I prefer autonomous to asynchronous, so: “autonomous coding agents.”

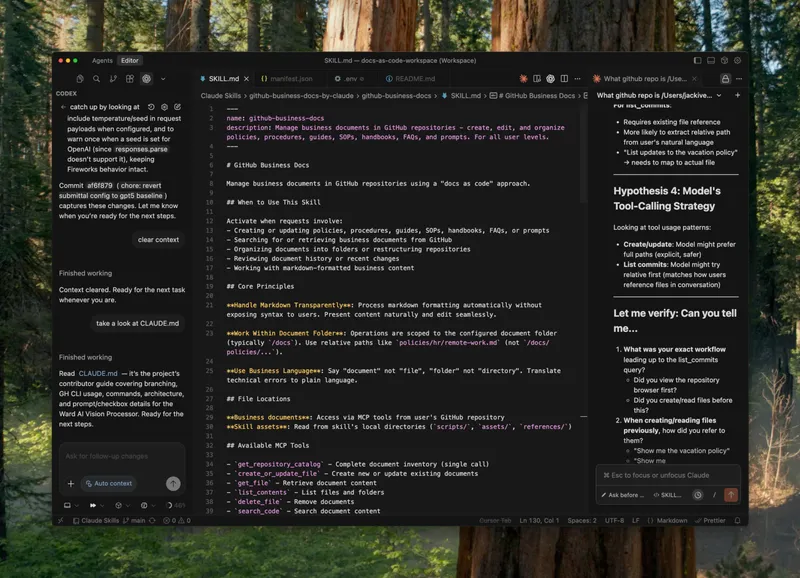

AI Conversation Transcript: Claude.ai

Background: The Coding Wars

Anthropic has invested heavily in the AI coding space. Its language models surprised many of us by jumping ahead of OpenAI in this space when Claude Sonnet 3.5¹ launched in June 2024. More surprisingly, Anthropic stayed ahead, progressing rapidly through Sonnet 3.7, 4, and most recently Sonnet 4.5 just over a month ago. I have used Anthropic coding models almost exclusively since 3.5 arrived.

Earlier this year, Anthropic jumped deeper into the fray with an actual coding tool, the minimalist coding agent Claude Code, whose motto might be:

Get out of the way, coding tools, and let our great models do their thing.

Not long after Claude Code arrived, it supplanted Cursor² as my primary AI coding interface.

So where was OpenAI, the biggest AI player of all? Since Sonnet 3.5 arrived: playing catch-up. I had a lot of respect for OpenAI models generally, for ChatGPT as a product, and for the robustness of their APIs and inference services. But coding belonged to Anthropic.

Not to say that OpenAI was standing still. Their release of GPT-5 this August was huge, clearly a substantial step forward, and some were saying GPT-5 rivaled Anthropic’s coding models. OpenAI then released a coding-tuned model, GPT-5 Codex, in mid-September. And OpenAI had, in a series of bizarre fits and starts, released its own minimalist coding agent, Codex CLI. But to me, this all felt like catch-up: just we-try-to-do-everything posturing from OpenAI.

Coding Agent Autonomy Changes the Game

What drove me to reconsider Codex was an ongoing stream of podcast and YouTube interviews where some person in OpenAI leadership (including president Greg Brockman!) would be interviewed about some other topic, but the conversation would drift over to Codex along the way, and we’d hear how OpenAI was dogfooding Codex internally to build OpenAI’s own products. Here’s an example from OpenAI DevDay on October 7th, where Sherwin Wu and Christina Huang of the OpenAI Platform Team get onto the subject of Codex:

What jumped out at me was that OpenAI’s core internal teams were using Codex heavily, in autonomous agent mode: fire-and-forget. They told stories about going into meetings and being asked to “give me a couple minutes here …” so people could fire off a few Codex Cloud cruise missiles that would grind away during the meeting and have results waiting for them afterwards.

Experiencing a series of legit, unstaged anecdotes like this (I’m pretty good at smelling “marketing message” and these definitely weren’t), my attitude about Codex went from “meh” to “NEED TO INSTALL ASAP.” Before I got to Codex Cloud, though, Anthropic jumped the line and launched their ACA, “Claude Code On The Web”³. Since I was in deep with Claude Code already, getting its web counterpart set up was low effort, and CCOTW ended up as my first Autonomous Coding Agent. Codex Cloud followed soon after.

Fire-and-forget That Works

In OG Claude Code, you can already fire-and-forget. It’s called YOLO mode. You turn off all protections, live dangerously, and let Claude do whatever it chooses to, which, in a command-line or terminal interface, is pretty much anything. Nuke the system drive? Sure thing. Wipe the GitHub repo? Yep. Shut down your AWS cloud? No problem. Claude Sonnet 4.5 is an ethical AI-person, better than most, but YOLO mode is dangerous and developers avoid it most of the time.

We mostly do our core work in non-YOLO mode, which involves carefully watching what the agent is doing, and many, many approvals of the agent’s polite requests: “can I use this potentially dangerous tool, please?” I get a lot of work done when I pair with Claude Code, but I’m close to 100% occupied keeping tabs on the model while I’m pairing.
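To make the difference between the two modes concrete, here is a minimal Python sketch of an approval gate. It is not how Claude Code is actually implemented; `ToolRequest`, `READ_ONLY_TOOLS`, and `is_allowed` are names I made up for illustration, and the idea that read-only tools skip the prompt is my own assumption.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical list of tools treated as harmless enough to run without asking.
READ_ONLY_TOOLS = {"read_file", "list_dir", "search"}


@dataclass
class ToolRequest:
    tool: str      # hypothetical tool name, e.g. "shell" or "read_file"
    argument: str  # the command or path the agent wants to use


def is_allowed(
    request: ToolRequest,
    yolo: bool,
    ask_human: Callable[[ToolRequest], bool],
) -> bool:
    """Decide whether the agent may run a tool.

    In YOLO mode everything is approved without a prompt. Otherwise,
    anything that is not read-only is sent to the human for a yes/no.
    """
    if yolo:
        return True
    if request.tool in READ_ONLY_TOOLS:
        return True
    return ask_human(request)
```

The `ask_human` callback is where all my attention goes during a pairing session: every call to it is one more interruption, which is exactly the cost YOLO mode removes and exactly the protection it throws away.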

What OpenAI and Anthropic really did with ACAs is deliver a reasonably safe YOLO mode, by equipping their agents with reasonably secure environments that the agent can go to town in, without risking much. “Go to town” means “run whatever you want, safely” – usually called sandboxing – but also “code whatever you want, safely – you can’t break anything.” This works because the agent does its work in its own personal code space, kept safely separate from the current codebase and other work in progress. Nothing there impacts our real code until we’ve had a chance to carefully look at it and let it in (to be merged).
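The “own personal code space” idea can be sketched locally in a few lines of Python. This is a toy under stated assumptions, not what Codex Cloud or Claude Code On The Web actually do: the real products run in hosted environments, while this version just copies a folder of text files to a scratch directory. The names `run_in_scratch_copy`, `diff_trees`, and `merge` are hypothetical, and `agent` stands in for whatever does the coding.

```python
from __future__ import annotations

import difflib
import shutil
import tempfile
from pathlib import Path
from typing import Callable


def read_lines(path: Path) -> list[str]:
    """Return a text file's lines, or an empty list if the file does not exist."""
    return path.read_text().splitlines(keepends=True) if path.exists() else []


def diff_trees(original: Path, modified: Path) -> str:
    """Build a unified diff covering every text file in either tree."""
    rel_paths = sorted(
        {p.relative_to(original) for p in original.rglob("*") if p.is_file()}
        | {p.relative_to(modified) for p in modified.rglob("*") if p.is_file()}
    )
    chunks: list[str] = []
    for rel in rel_paths:
        old = read_lines(original / rel)
        new = read_lines(modified / rel)
        chunks.extend(difflib.unified_diff(old, new, f"a/{rel}", f"b/{rel}"))
    return "".join(chunks)


def run_in_scratch_copy(repo: Path, agent: Callable[[Path], None]) -> tuple[Path, str]:
    """Let the agent work on a copy of the repo and return (copy, diff for review)."""
    scratch = Path(tempfile.mkdtemp(prefix="agent-")) / repo.name
    shutil.copytree(repo, scratch)
    agent(scratch)  # the agent only ever touches the copy
    return scratch, diff_trees(repo, scratch)


def merge(scratch: Path, repo: Path) -> None:
    """Human-approved step: copy the agent's files over the real codebase.

    Simplification: files the agent deleted in the copy are not removed here.
    """
    shutil.copytree(scratch, repo, dirs_exist_ok=True)
```

The point of the split is that `run_in_scratch_copy` can be as reckless as it likes, because the only way changes reach the real code is through `merge`, and nobody calls `merge` until a human has read the diff.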

Well-equipped Environments, Well-defined Specs

Willison’s piece dragged me the rest of the way into ACAs; I did the research and set up my first custom Codex Cloud environment just today. Until now, I’ve been living with Codex’s default setup with no customization and conservative default protections.

The actual focus of Willison’s post is using autonomous coding agents for coding research projects, and he walks us through several interesting real-life examples. I like his suggestion to use a separate Git repo for these projects—one more layer of “don’t worry, you can’t break anything.”

The human role in this autonomous new world? Clear, well-thought-out specs that precisely communicate what the agent should build for you. There’s nothing preventing you from having 10 or 20 agents working away—except 10 or 20 good, clear specs.

Footnotes

I do know that Anthropic called it Claude 3.5 Sonnet. In this case, I choose “avoid confusion and awkwardness.” ↩

Well, I didn’t abandon Cursor exactly. My current insane, wonderful dev setup looks like this: container app: Cursor; left pane: Codex CLI; center pane: source files; right pane: Claude Code ↩

It’s hilarious how bad both OpenAI and Anthropic are with product names. ↩