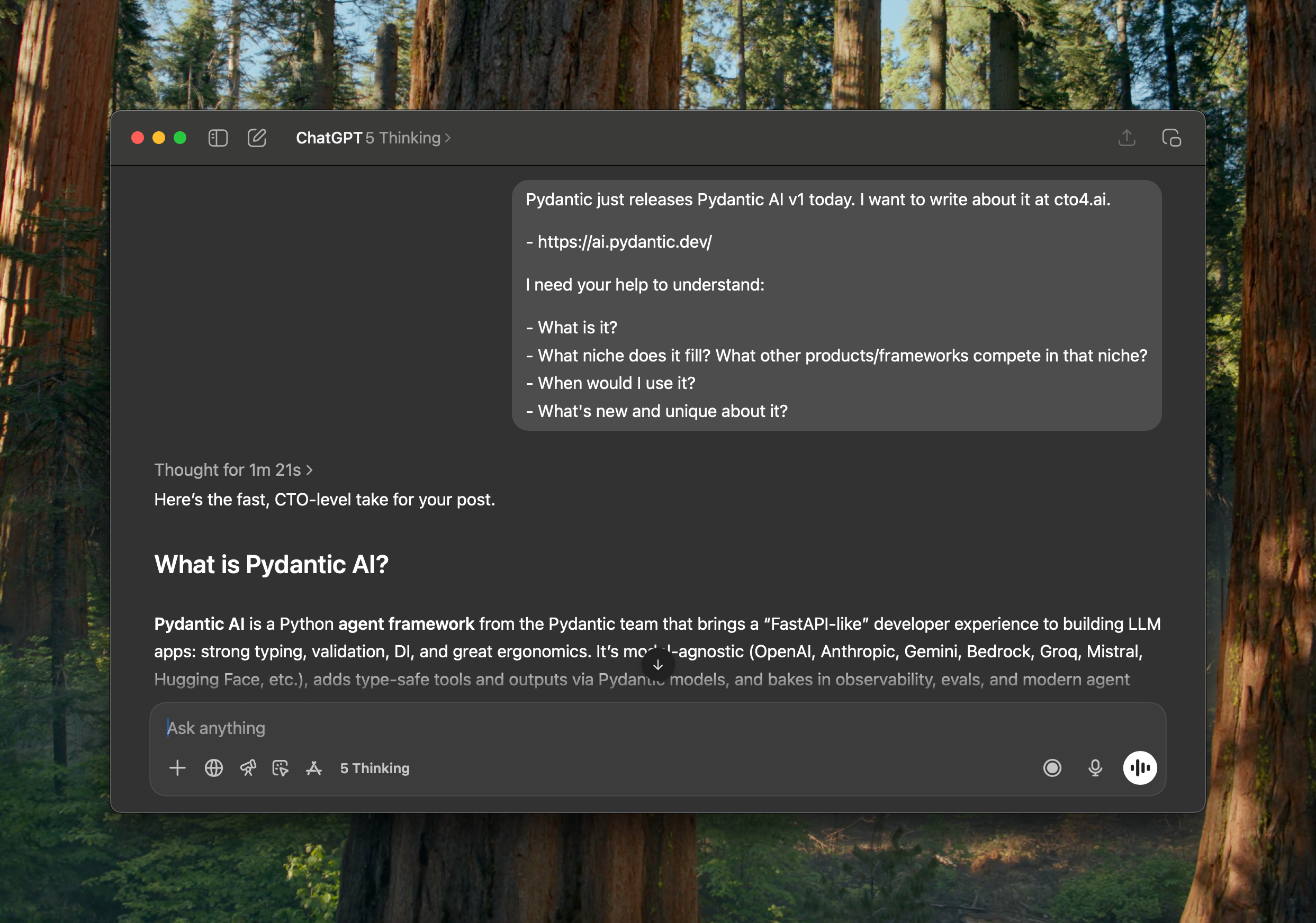

ChatGPT 5 Thinking Is So Damn Smart

I needed to round out my knowledge of Pydantic AI. ChatGPT 5 Thinking absolutely nailed it.

I spent some time at the Pydantic booth at the AI Engineer World’s Fair in SF this June. Today, I got their announcement that Pydantic AI v1 is out, and decided to write about it.

I had Grand Canyon-sized gaps in my knowledge about Pydantic AI, though, so I asked ChatGPT 5 Thinking to help me get up to speed.

NAILED IT. Here’s the chat transcript.

AI Conversation Transcript: ChatGPT 5 Thinking

Pydantic just released Pydantic AI v1 today. I want to write about it at cto4.ai.

- https://ai.pydantic.dev/

- What is it?

- What niche does it fill? What other products/frameworks compete in that niche?

- When would I use it?

- What's new and unique about it?

Here’s the fast, CTO-level take for your post.

# What is Pydantic AI?

Pydantic AI is a Python agent framework from the Pydantic team that brings a “FastAPI-like” developer experience to building LLM apps: strong typing, validation, DI, and great ergonomics. It’s model-agnostic (OpenAI, Anthropic, Gemini, Bedrock, Groq, Mistral, Hugging Face, etc.), adds type-safe tools and outputs via Pydantic models, and bakes in observability, evals, and modern agent standards.

Key pillars called out in the docs:

- Type-safe agents, tools & outputs (Pydantic models for both tool inputs and final outputs).

- Dependency Injection (FastAPI-style) for passing state/resources into tools and instructions.

- Observability via Pydantic Logfire (OpenTelemetry based) for traces, spans, prompts, costs.

- Standards integrations: MCP (Model Context Protocol), AG-UI (agent↔UI streaming), A2A (agent↔agent).

- Durable execution (long-running/human-in-the-loop) with Temporal/DBOS options.

- Graph workflows and streamed structured outputs.
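To make the dependency-injection pillar concrete, here is a minimal stdlib-only sketch of the pattern. It deliberately does not use the Pydantic AI API: `Context`, `SupportDeps`, `get_balance`, and `run_tool` are hypothetical names invented for illustration, and the dict stands in for a real database connection.

```python
from dataclasses import dataclass
from typing import Callable, Generic, TypeVar

DepsT = TypeVar("DepsT")


@dataclass
class Context(Generic[DepsT]):
    """Hypothetical stand-in for a run context that carries injected dependencies."""
    deps: DepsT


@dataclass
class SupportDeps:
    """Everything a tool needs, declared as one typed object."""
    customer_id: int
    balances: dict[int, float]  # assumption: stands in for a database connection


def get_balance(ctx: Context[SupportDeps]) -> float:
    """Tool: return the current customer's balance using only injected state."""
    return ctx.deps.balances[ctx.deps.customer_id]


def run_tool(tool: Callable[[Context[SupportDeps]], float], deps: SupportDeps) -> float:
    """Hypothetical runner: wrap the deps in a context and call the tool."""
    return tool(Context(deps=deps))


# The same tool runs against "production" and test dependencies unchanged.
prod = SupportDeps(customer_id=7, balances={7: 120.5})
fake = SupportDeps(customer_id=1, balances={1: 0.0})
print(run_tool(get_balance, prod))
print(run_tool(get_balance, fake))
```

Because the tool reads only from `ctx.deps`, swapping real resources for test doubles needs no patching, which is the testability benefit typed dependencies are meant to give.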

Nearby alternatives:

- LangChain / LangGraph – giant ecosystem for agents, tools, and graph workflows; widely adopted but less opinionated on strict typing/DI.

- LlamaIndex – agent + data/knowledge tooling; strong on RAG and workflows; has multi-agent frameworks.

- Microsoft Semantic Kernel – model-agnostic SDK for building agents (C#, Python, Java), enterprise-tilted.

- DSPy – “programming, not prompting”; declarative pipelines + auto-optimization; complements or replaces parts of agent orchestration.

# When would you use it?

- You want typed, validated outputs (e.g., an enum/status + payload) and typed tool inputs—and you want the model to retry until the schema passes.

- Your stack is Python + FastAPI/Pydantic already, and you want dependency-injected state/resources inside tools/instructions (db conns, user/session, feature flags).

- You need multi-provider agility (OpenAI today, Gemini or DeepSeek/HF tomorrow) without a rewrite.

- You care about prod-grade traces/evals/costs from day one (Logfire + OTel).

- Your UI needs live streaming with tool calls and shared state (AG-UI), or your estate already uses MCP tools/servers.

- You’re building durable or multi-step flows (human approvals, retries, long tasks) or graph workflows that stay readable.

# What's new and unique about it?

- API Stability Promise. v1 commits to stability (no breaking changes for a defined window) so teams can adopt with confidence.

- Ecosystem maturity. Official docs now cover providers (incl. Hugging Face Inference Providers), AG-UI integration, MCP client/server & sampling, tool approvals, durable exec, graphs, and Pydantic Evals—all in one place.

- Observability first. Deep Logfire hooks with OTel semantics for GenAI—debug back-and-forth prompts, tool calls, and HTTP layer when needed.

- Standards-forward. Out-of-the-box AG-UI for frontend streaming/state; MCP to plug in external tools and even route model calls through MCP sampling if you want.

- Angle: “FastAPI for Agents.” Open with the pain (flaky schemas, hidden tool errors, weak obs), then show how typed tools/outputs + DI + Logfire give you *write-time safety* and *run-time visibility*. Demo a tiny agent returning a `BaseModel` and a tool with a docstring description; show a Logfire trace screenshot and one AG-UI code stanza.

- Compare section: Map Pydantic AI vs LangChain/LangGraph, LlamaIndex, SK, DSPy in a small table (typing/DI, observability, RAG/data tooling, graph/multi-agent, optimization/evals).

- Production checklist: providers, retries, approvals, evals, OTel/export, durable exec, testability via typed deps.
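The "retry until the schema passes" idea mentioned earlier can be sketched without any framework. This is a stdlib-only illustration under stated assumptions, not Pydantic AI's implementation: `Status`, `Result`, `parse_result`, `run_with_retries`, and `fake_model` are hypothetical names, and the canned replies stand in for a real LLM call.

```python
import json
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Status(str, Enum):
    OK = "ok"
    FAILED = "failed"


@dataclass
class Result:
    """The typed output we want: an enum status plus a payload."""
    status: Status
    payload: str


def parse_result(raw: str) -> Result:
    """Validate raw model text against the Result schema; raise ValueError on mismatch."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("payload"), str):
        raise ValueError("payload must be a string")
    # Status(...) raises ValueError for unknown or missing values.
    return Result(status=Status(data.get("status")), payload=data["payload"])


def run_with_retries(model: Callable[[str], str], prompt: str, max_retries: int = 2) -> Result:
    """Call the model, feeding validation errors back until the output parses."""
    message = prompt
    for _ in range(max_retries + 1):
        raw = model(message)
        try:
            return parse_result(raw)
        except ValueError as exc:
            message = f"{prompt}\n\nYour last reply was invalid ({exc}). Reply with valid JSON."
    raise RuntimeError(f"no valid output after {max_retries + 1} attempts")


# Assumption: canned replies simulate a model that needs two corrections.
replies = iter([
    "not json",
    '{"status": "maybe", "payload": "x"}',
    '{"status": "ok", "payload": "done"}',
])


def fake_model(message: str) -> str:
    return next(replies)


result = run_with_retries(fake_model, "Summarize the ticket.")
print(result.status.value, result.payload)
```

The design point is that the caller only ever sees a validated `Result` or an explicit failure, so malformed model output never leaks into downstream code.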

See if you can find any podcast or youtube appearances in the past 60-90 days featuring one of the core Pydantic team talking about Pydantic AI

Here are good, recent hits (within ~60–90 days) where a core Pydantic team member talks about Pydantic AI:

- Podcast — Software Engineering Radio #676 (Jul 10, 2025): Samuel Colvin (Pydantic founder/CEO) discusses the Pydantic ecosystem, explicitly including Pydantic AI and Logfire—design goals, how to build/test/evaluate agents, and refactors behind the scenes.

- YouTube — “Pydantic AI — MCP Sampling” (≈2 months ago): Short demo on the Pydantic channel showing MCP Sampling with Pydantic AI as both MCP client and server—very relevant to toolcalling/orchestration stories.

- YouTube — “Human-seeded Evals — Samuel Colvin, Pydantic” (published late July 2025): Colvin’s AI Engineer World’s Fair talk; covers Pydantic’s approach to type-safe agents, evals, and observability; ties directly back to Pydantic AI patterns. (StartupHub’s write-up pegs the video to Jul 25, 2025.)

OK, take all of this and make either a markdown file or a canvas that can be saved as markdown. Please include all source links.

All set! I dropped everything into a canvas you can save as Markdown: “Pydantic AI v1 — CTO Field Guide (cto4.ai draft)”. It includes your requested sections plus every source link inline.

Key sources used (for your reference): Pydantic AI docs/homepage · V1 announcement (2025-09-04) · API-stability policy & changelog · Logfire/OTel integration · MCP overview · AG-UI protocol · Durable execution overview/Temporal/DBOS · Model/provider support overview · GitHub releases/PyPI/versioning.

Competitor docs: LangGraph · LlamaIndex Agents · Semantic Kernel (Agent Framework + overview) · DSPy (site + GitHub) · CrewAI Agents.

Recent media (past ~60–90 days): SE Radio #676 (Samuel Colvin) · “Pydantic AI — MCP Sampling” (YouTube) · “MCP is all you need — Samuel Colvin” (AI Engineer World’s Fair).

Want me to tweak the tone for cto4.ai (e.g., more opinionated, add a code snippet, or a quick comparison graphic)?

Can you save a transcript of this conversation for me?

(You are here.)

Note about canvas: A canvas titled “Pydantic AI v1 — CTO Field Guide (cto4.ai draft)” was created during this conversation; its full content is not duplicated here. You can export/save it directly from the canvas.

Two notes:

- The idea of having a component to render chat transcripts inside blog posts was stolen from Orta Therox. I vibe coded mine in Claude Code.

- ChatGPT 5 Thinking believed it was writing the blog post itself. It was not; I’ll be doing that by hand, thank you very much.